Maximizing Performance with Client-Side Throttling Techniques

In this blog post, we are going to cover the concept of API throttling from a client-side perspective, starting with understanding why we need rate limiting and throttling in the first place as well as discussing the need for client-side throttling in particular. Then, we will move on to review a few common throttling algorithms you can implement according to your needs.

Backend Throttling

Rate limiting and throttling of APIs are key concepts for designing resilient systems. We mostly know these terms from the backend side. Depending on popularity and need, every API is provisioned on a certain number of servers to meet the incoming traffic demand. Requests that exceed the maximum server capacity (too many or too fast) can degrade performance and cause the API provider to miss its SLA to consumers. Spikes of requests from a subset of clients can also starve the other clients of their fair share. This is where rate limiting and throttling come in. Put simply, rate limiting and throttling mean controlling and regulating the number of calls to an API or the amount of traffic to an application or server.

Rate limits are a common practice by API providers, varying according to different criteria, such as the provider’s business model, SLA, or infrastructure capacity.

For example, by implementing the leaky bucket algorithm, GitHub’s API reports the number of requests remaining in the client’s bucket along with the estimated refill time. When receiving a 429 status code response (Too Many Requests), the client can sleep until the refill time and then retry. Similarly, Twitter has published a comprehensive list of the rate limits its API applies to developers. These limits are defined per 15-minute window for v2 standard basic access. LinkedIn’s API documentation is not as detailed as Twitter’s, but it also states that the number of calls a client can make per day varies with the type of request. Heroku, using the GCRA algorithm, allows requests to be evenly spread out and utilizes exponential backoff for its rate-throttling algorithm.

The foremost reason for server-side rate limiting and throttling is to maintain service quality and avoid system overload by allowing only a fixed number of API calls per time period. Without rate limiting, users can make as many requests as they want, which might lead to a system crash or a lagging service. Rate limiting ensures the availability of service to all clients by avoiding resource starvation.

Another important aspect is security. Malicious overuse can be controlled by limiting user calls. This helps against certain cyber-attacks, such as DDoS, web scraping, brute force, credential stuffing, and so on. APIs with sensitive data must be rate limited.

So, if the API provider already limits their customers' consumption, why does the customer need to implement throttling policies as well? In other words, why do we need client-side throttling?

Client-side Throttling

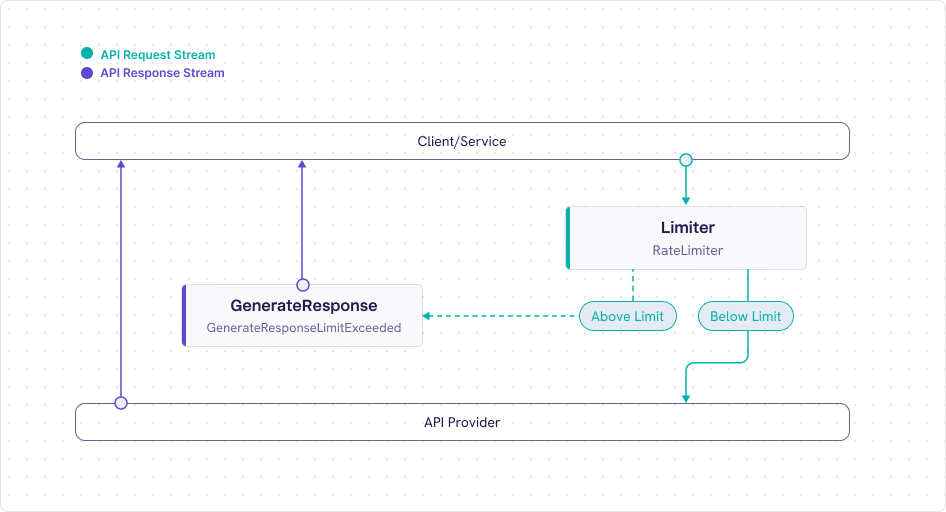

As we have said, API providers utilize rate limiting to regulate the traffic of incoming API calls and throttle their clients if the rate of incoming calls exceeds the limit. Client-side throttling applies the same idea in the opposite direction of the client-server relationship: the consumer regulates its own outgoing calls instead of waiting for the provider to reject them.

If the client exceeds the number of calls allowed per time window, the excess calls will receive a response with a 429 “Too Many Requests” status code. This indicates that either the client is making too many calls or the rate limits offered by the server are too low. The client must respect this and adopt coping mechanisms like retries or exponential backoff.
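To make the retry-and-backoff idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not any provider's official client: the `send` callable, the `(status, retry_after)` response shape, and all parameter names are hypothetical, and the `retry_after` value stands in for the standard `Retry-After` response header when the server sends one.

```python
import random
import time
from typing import Callable, Optional, Tuple

# Hypothetical response shape: (status_code, retry_after_seconds or None).
Response = Tuple[int, Optional[float]]


def call_with_backoff(
    send: Callable[[], Response],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call send(), retrying on HTTP 429 with exponential backoff and jitter."""
    for attempt in range(max_retries + 1):
        status, retry_after = send()
        # Return on any non-429 answer, or when the retry budget is used up.
        if status != 429 or attempt == max_retries:
            return status, retry_after
        if retry_after is not None:
            # Prefer the server's own hint when it provides one.
            delay = retry_after
        else:
            # Otherwise double the ceiling on each attempt and pick a random
            # delay below it, so many clients do not retry in lockstep.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            delay = random.uniform(0, ceiling)
        sleep(delay)
    raise AssertionError("unreachable: the loop always returns")
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting; in production code the default `time.sleep` would be used.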

And so, instead of waiting to crash into the “rate limit wall”, which can be unexpected without proper visibility, it’s better for the client (meaning the API consumer) to be proactive and define their consumption policy. In other words, this means defining by which metrics they will decide when to throttle consumption and being aware beforehand when they will be expected to reach the rate limit.

Client-side throttling has prominent advantages, mainly around:

(I) Controlling the Consumption Logic

Each company consuming external APIs has its own business logic, which means that consumption correlates with specific business needs. Controlling the consumption logic means actively defining the policy by which the client makes API calls and throttling when it gets close to the rate limit.

Examples of questions to ask when implementing client-side throttling might be:

- Is there a priority that I can give every API call? If API calls are done for multiple services, can we prioritize the API calls made from one service over the other, assuming that the API resource is limited?

- Can I delay some API calls and perform them at a later stage? If I’m expected to reach a rate limit, can I save some API calls in a delayed queue and perform them when there’s less API traffic (perhaps at night or over the weekend?)

- Is there a better throttling algorithm I can use for my needs? There are different throttling algorithms that fit different use cases. One example is smoothing traffic bursts.

- Do I want to be aware and notified before hitting the rate limit? Knowing in advance how close I am to the rate limit or when I’m expected to encounter it can help me better prepare for it.

- Is there an SLA policy I am confined to? Client-side throttling helps meet service-level agreements (SLAs) by providing a fair share of resources to clients while remaining within expected bounds.
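The first two questions (prioritizing calls and deferring the less urgent ones) can be sketched with a small priority queue. This is only an illustration: the class name, the `budget` parameter, and the convention that a lower number means higher priority are assumptions made for this example.

```python
import heapq
import itertools
from typing import Any, List, Tuple


class PrioritizedCallQueue:
    """Holds pending API calls and releases the most important ones first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, Any]] = []
        # Monotonic counter: keeps first-in first-out order within a priority.
        self._order = itertools.count()

    def submit(self, call: Any, priority: int) -> None:
        # Assumption: lower number = more important (0 is the highest priority).
        heapq.heappush(self._heap, (priority, next(self._order), call))

    def release(self, budget: int) -> List[Any]:
        """Pop up to `budget` calls; the rest stay queued for a quieter period."""
        released = []
        while self._heap and len(released) < budget:
            _, _, call = heapq.heappop(self._heap)
            released.append(call)
        return released

    def __len__(self) -> int:
        return len(self._heap)
```

Here `budget` would be the number of calls the client is still willing to spend in the current window; anything left in the queue is effectively the delayed queue described above.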

(II) Lowering Costs

Client-side throttling helps lower costs by controlling expenditures in the event of resource misconfiguration, avoiding massive server bills or pager notifications.

Also, think about API tiers; some APIs change their pricing once consumption exceeds the defined quota, moving the client to another tier. Knowing in advance when to stop, or how to throttle in order to stay in the right tier, is a measure worth taking.

Tracking the Rate Limit

APIs typically indicate how close a client is to its rate limits in three ways:

(I) Response Header

Some API providers, such as GitHub, report the rate limit and the margin left until hitting that limit in the response headers.

$ curl -i https://api.github.com/users/octocat/orgs

HTTP/2 200

Server: nginx

Date: Fri, 12 Oct 2012 23:33:14 GMT

Content-Type: application/json; charset=utf-8

ETag: "a00049ba79152d03380c34652f2cb612"

X-GitHub-Media-Type: github.v3

x-ratelimit-limit: 5000

x-ratelimit-remaining: 4987

x-ratelimit-reset: 1350085394

Content-Length: 5

Cache-Control: max-age=0, private, must-revalidate

X-Content-Type-Options: nosniff

It is clear that GitHub provides the following information in the header of every API response:

- The rate limit threshold, i.e., the number of API calls (x-ratelimit-limit)

- The number of remaining API calls until the rate limit (x-ratelimit-remaining)

- The time at which the rate limit resets, expressed as a UTC epoch timestamp in seconds (x-ratelimit-reset)

However, do note that each API provider uses slightly different header names. For example, take a look at Twitter’s API (note the extra hyphen):

x-rate-limit-limit: 40000

x-rate-limit-remaining: 39951

x-rate-limit-reset: 1666558688

And there are also other headers, such as “x-rate-limit-interval.”

The variety of headers that API providers implement makes it a challenge to define a unified throttling policy across multiple APIs, and it may require dedicated handling for each consumed API.
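One way to cope with this variety is to normalize the headers into a single structure before applying any policy. The sketch below handles only the two naming styles shown above; the `RateLimitStatus` class, the function name, and the 10% warning threshold are assumptions for illustration, and other providers will likely need their own header names added.

```python
import time
from dataclasses import dataclass
from typing import Mapping, Optional

# Header-name variants from the two examples above (with and without the extra hyphen).
_LIMIT = ("x-ratelimit-limit", "x-rate-limit-limit")
_REMAINING = ("x-ratelimit-remaining", "x-rate-limit-remaining")
_RESET = ("x-ratelimit-reset", "x-rate-limit-reset")


@dataclass
class RateLimitStatus:
    limit: int
    remaining: int
    reset_epoch: int  # Unix timestamp (seconds) at which the window resets

    def seconds_until_reset(self, now: Optional[float] = None) -> float:
        now = time.time() if now is None else now
        return max(0.0, self.reset_epoch - now)

    def is_near_limit(self, threshold: float = 0.1) -> bool:
        # True when less than `threshold` (a fraction) of the quota is left.
        return self.remaining < self.limit * threshold


def parse_rate_limit(headers: Mapping[str, str]) -> Optional[RateLimitStatus]:
    """Return a RateLimitStatus, or None if any of the three headers is missing."""
    # HTTP header names are case-insensitive, so compare them in lowercase.
    lowered = {name.lower(): value.strip() for name, value in headers.items()}

    def first(names):
        return next((lowered[n] for n in names if n in lowered), None)

    limit, remaining, reset = first(_LIMIT), first(_REMAINING), first(_RESET)
    if limit is None or remaining is None or reset is None:
        return None
    return RateLimitStatus(int(limit), int(remaining), int(reset))
```

A client could call `is_near_limit()` after every response to raise an alert or slow down before the provider starts returning 429 responses.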

(II) User Panel

Some API providers, such as Okta, provide visibility to the rate limits usage over time in their user panel.


(III) Documentation

A lot of API providers will have the right documentation in place for developers to know what the rate limits are. Even so, many APIs lack proper documentation and fail to communicate the boundaries of their service clearly.

Throttling Algorithms

As Richard Schneeman aptly states, “If you provide an API client that doesn't include rate limiting, you don't really have an API client. You've got an exception generator with a remote timer.”

Effective throttling strategies are crucial for system efficacy. According to Heroku, some prominent points to consider while implementing throttling are as follows:

- Minimize average retry rate: A better user experience is ensured with fewer client-side errors and failed requests.

- Minimize maximum sleep time: The maximum time taken to process a request should be minimal, i.e., less throttling time.

- Minimize variance of request count between clients: All the API requests need to be processed fairly. No client should feel detached from the distributed system.

- Minimize time to clear a large request capacity: Throttling needs to be effectively adjusted with system changes owing to capacity requirements.

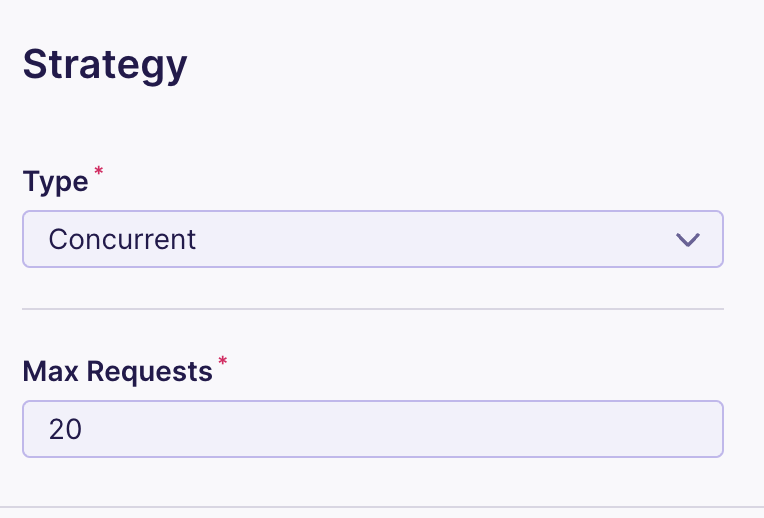

The throttling strategy on the client side highly depends on rate-limiting algorithms deployed on the server side. Here’s a list of some of the commonly used algorithms to consider when implementing the right throttling strategy:

(I) Leaky Bucket Algorithm

In this algorithm, rate limiting is done by queuing API calls. The requests are treated as being placed in a bucket and processed in a first-in first-out (FIFO) manner at regular intervals. When the bucket is full, it overflows: once the queued API calls reach the bucket’s capacity, further requests are discarded. Because the queue size is bounded, the algorithm is memory efficient, which also makes it feasible for small servers and load balancers. However, as it is based on FIFO, requests from a single client or older requests can fill the queue, leaving no room for new requests.
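A minimal Python sketch of this queue-based leaky bucket might look as follows. The class and method names are illustrative assumptions, and the "regular interval" is modeled as an explicit `tick()` call rather than a real timer.

```python
from collections import deque
from typing import Any, Deque, List


class LeakyBucket:
    """Bounded FIFO queue that is drained at a fixed rate."""

    def __init__(self, capacity: int, leak_per_tick: int) -> None:
        self.capacity = capacity
        self.leak_per_tick = leak_per_tick
        self._queue: Deque[Any] = deque()

    def offer(self, request: Any) -> bool:
        """Queue a request; return False (request discarded) when the bucket is full."""
        if len(self._queue) >= self.capacity:
            return False
        self._queue.append(request)
        return True

    def tick(self) -> List[Any]:
        """Called once per interval: release the oldest queued requests."""
        count = min(self.leak_per_tick, len(self._queue))
        return [self._queue.popleft() for _ in range(count)]
```

With a capacity of 3, a burst of five requests keeps the first three and discards the last two, which is exactly the starvation risk for newer requests mentioned above.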

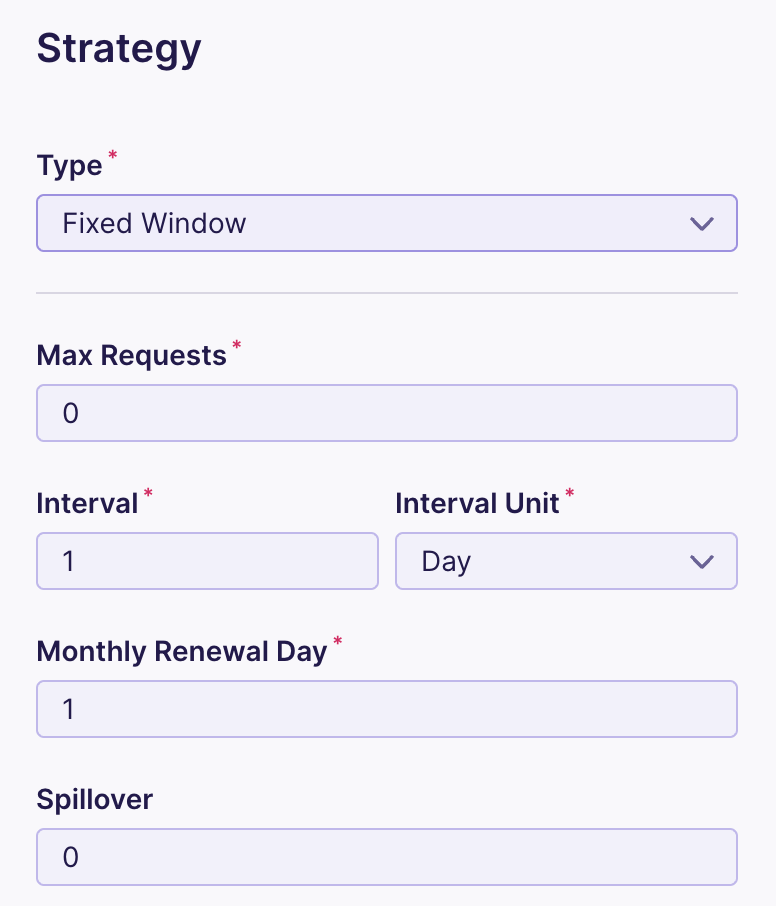

(II) Fixed Window Algorithm

This algorithm consists of a fixed window, say n seconds, to regulate the API calls. The window has a counter that is incremented with each incoming request until it reaches a threshold value. The requests after the said value are discarded.

The fixed window algorithm ensures the processing of more recent requests compared to the leaky bucket. However, a burst near a window’s boundary can be processed at up to twice the intended rate, because requests at the end of the current window and at the start of the next one are both allowed. A large number of clients waiting for the window to reset can also cause a stampede of requests at that moment.
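The counter and its boundary weakness can be seen in a short Python sketch. The class name and the choice to pass the current time in explicitly (so the behavior is reproducible) are assumptions made for this example.

```python
from typing import Optional


class FixedWindowLimiter:
    """Allows at most `limit` requests in each fixed window of `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._window_index: Optional[int] = None
        self._count = 0

    def allow(self, now: float) -> bool:
        # Windows are aligned to multiples of window_seconds.
        index = int(now // self.window_seconds)
        if index != self._window_index:
            # A new window has started: reset the counter.
            self._window_index = index
            self._count = 0
        if self._count >= self.limit:
            return False
        self._count += 1
        return True


limiter = FixedWindowLimiter(limit=5, window_seconds=60)
end_of_window = [limiter.allow(now=59.0) for _ in range(5)]
start_of_next = [limiter.allow(now=60.0) for _ in range(5)]
# All ten calls pass although they arrive within about one second.
burst_allowed = sum(end_of_window) + sum(start_of_next)
```

With a limit of five per minute, ten calls get through in roughly one second around the boundary, which is the doubled rate described above.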

(III) Sliding Window Algorithm

The sliding window algorithm refines the fixed window algorithm to resolve its boundary issues. The counter is updated for each request in the same way, but a weighted share of the prior window’s request count, based on how far the current window has progressed, is added to it. This smooths traffic bursts and allows flexible scaling across massive clusters. Both the starvation issue of the leaky bucket and the traffic bursts of the fixed window algorithm are addressed in the sliding window algorithm, leading to effective performance and scalability.
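The weighting can be sketched in Python as a sliding window counter. This is one common way to approximate a sliding window and is an assumption of this example rather than a specific provider's implementation; the names are illustrative, and the current time is passed in explicitly so the behavior is reproducible.

```python
from typing import Optional


class SlidingWindowLimiter:
    """Sliding window counter: current count plus a weighted share of the previous window."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._window_index: Optional[int] = None
        self._current = 0
        self._previous = 0

    def allow(self, now: float) -> bool:
        index = int(now // self.window_seconds)
        if self._window_index is None:
            self._window_index = index
        elif index != self._window_index:
            # Carry the old count over only if the windows are adjacent.
            adjacent = index == self._window_index + 1
            self._previous = self._current if adjacent else 0
            self._current = 0
            self._window_index = index
        # Fraction of the current window that has already elapsed.
        elapsed = (now % self.window_seconds) / self.window_seconds
        # The previous window counts less and less as the current one progresses.
        estimated = self._previous * (1.0 - elapsed) + self._current
        if estimated + 1 > self.limit:
            return False
        self._current += 1
        return True


limiter = SlidingWindowLimiter(limit=5, window_seconds=60)
at_59 = sum(limiter.allow(now=59.0) for _ in range(5))  # fills the first window
at_60 = sum(limiter.allow(now=60.0) for _ in range(5))  # blocked: previous window still counts fully
at_90 = sum(limiter.allow(now=90.0) for _ in range(5))  # half the previous window has aged out
```

A burst of five calls at t = 59 s followed by five more at t = 60 s no longer doubles the rate: the second group is rejected, and capacity returns gradually as the previous window's weight decays.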

Maximize Efficiency with Client-Side Throttling


Rate limiting and throttling are key concepts for regulating API calls, and applying them on the client side brings benefits of its own. The efficacy of a system depends heavily on the ability of developers to handle throttling well. By managing the rate of API calls to commercial services that incur usage-based charges, client-side throttling also acts as a cost-control mechanism. Furthermore, it regulates resource usage, reduces client-side errors, and enhances the user experience.

Do you want to learn more about how to implement client-side throttling or how to build the throttling logic that fits your needs? Don’t hesitate to contact us.
