
How to Reduce Back-Offs Caused by 429 Errors by 50%?

This blog post addresses a technical challenge of API consumption at scale: what happens when third-party APIs are consumed without proper management. The scenario we discuss involves multiple services calling the same third-party API in parallel, which leads to ever-growing, exponential cool-down periods. Handling API calls correctly in response to '429' rate-limit responses can substantially reduce these cool-down durations.

Recap - How Do Rate Limits Work?

Rate limits act as control mechanisms for the amount of incoming or outgoing traffic in a network. For example, an API rate limit might restrict the number of requests to 100 per minute. When this limit is exceeded, an error message is generated to inform the requester that they have exceeded the allowed number of requests within a specific time frame.

For HTTP API requests, this error is typically signaled by a response with the 429 status code, which RFC 6585 defines as "Too Many Requests." The response typically includes details about the allowed request rate and may include a "Retry-After" header indicating how long to wait before making the next request.
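The 100-requests-per-minute example can be sketched as a fixed-window counter that answers with a 429 and a Retry-After value once the window's budget is spent. This is only one possible server-side policy (providers may use sliding windows or token buckets instead), and the class name `FixedWindowLimiter` and the choice to set Retry-After to the time left in the current window are illustrative assumptions.

```python
import math
from typing import Optional, Tuple


class FixedWindowLimiter:
    """Hypothetical server-side limiter: at most `limit` requests per fixed `window` of seconds."""

    def __init__(self, limit: int = 100, window: float = 60.0):
        self.limit = limit
        self.window = window
        self.window_start = 0.0
        self.count = 0

    def check(self, now: float) -> Tuple[int, Optional[int]]:
        """Return (status, retry_after_seconds) for a request arriving at time `now`."""
        start = math.floor(now / self.window) * self.window
        if start != self.window_start:
            # A new window has begun: reset the counter.
            self.window_start = start
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return 200, None
        # Over the limit: ask the client to wait until the current window ends.
        retry_after = math.ceil(self.window_start + self.window - now)
        return 429, retry_after


limiter = FixedWindowLimiter(limit=100, window=60.0)
statuses = [limiter.check(1.0)[0] for _ in range(100)]  # first 100 requests in minute 0
print(limiter.check(15.0))  # 101st request in the same minute → (429, 45)
```

Rejected requests do not consume budget here, so every 429 in the same window simply reports the time remaining until the counter resets.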

Although it is not mandatory, following the practices outlined in the RFC, such as sending a "Retry-After" header, is a good way to tell clients what the server expects of them.

What’s the Retry-After Header?

The Retry-After HTTP response header indicates how long the user agent should wait before making a follow-up request. There are three main cases in which this header is used:

- When sent with a 503 (Service Unavailable) response, this indicates how long the service is expected to be unavailable.

- When sent with a 429 (Too Many Requests) response, this indicates how long to wait before making a new request.

- When sent with a redirect response, such as 301 (Moved Permanently), this indicates the minimum time that the user agent is asked to wait before issuing the redirected request.
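Per the HTTP specification, a Retry-After value is either a whole number of seconds (for example `120`) or an HTTP-date. A client that honors the header has to handle both forms; the helper below is a minimal sketch of that, and its name `parse_retry_after` is hypothetical.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def parse_retry_after(value: str, now: Optional[datetime] = None) -> Optional[float]:
    """Return the wait in seconds described by a Retry-After value, or None if it is invalid."""
    value = value.strip()
    if value.isascii() and value.isdigit():
        # delay-seconds form, e.g. "120"
        return float(value)
    try:
        # HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError, IndexError):
        return None  # neither form: treat as missing
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    # A date in the past means "retry now".
    return max(0.0, (when - now).total_seconds())


print(parse_retry_after("10"))  # → 10.0
```

Returning `None` for an unparseable value leaves the fallback (for example, a default back-off) to the caller.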

In this blog post we focus on the 429 (Too Many Requests) case, which occurs when the rate limit is exceeded.

Some APIs also return dedicated headers that report the client's current rate-limit state.

The Problem

Consider a scenario in which multiple services consume a third-party API that enforces its own rate limits.

In our scenario, multiple services use the Outlook API, which allows 10,000 requests per 10-minute period. In practice, this means at most 10,000 requests against a single user's mailbox within a 10-minute window.

Assume a throttling mechanism is already in place to avoid reaching the API's rate limit, following Microsoft's recommendations such as increasing the page size or reducing the number of GET requests for individual items. Even so, the Retry-After cool-down period grows over time. The cool-down increases exponentially, which significantly lengthens the time required to clear a workload of API calls.
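A client-side throttle of the kind mentioned above can be sketched as a sliding-window counter sized to the 10,000-requests-per-10-minutes budget. The class `SlidingWindowThrottle` is hypothetical, and treating the provider's window as sliding is an assumption; it is the conservative choice if the provider actually uses fixed windows.

```python
from collections import deque
from typing import Deque


class SlidingWindowThrottle:
    """Hypothetical client-side throttle: at most `limit` calls in any `window`-second span."""

    def __init__(self, limit: int = 10_000, window: float = 600.0):
        self.limit = limit
        self.window = window
        self.sent: Deque[float] = deque()  # timestamps of calls already let through

    def try_acquire(self, now: float) -> bool:
        # Forget calls that have slid out of the window.
        while self.sent and self.sent[0] <= now - self.window:
            self.sent.popleft()
        if len(self.sent) < self.limit:
            self.sent.append(now)
            return True
        return False  # caller should queue or delay this call


throttle = SlidingWindowThrottle(limit=3, window=10.0)  # tiny numbers for illustration
decisions = [throttle.try_acquire(t) for t in (0.0, 1.0, 2.0, 5.0, 10.0)]
print(decisions)  # → [True, True, True, False, True]
```

Note that an in-process throttle like this only counts the calls made by the process that owns it, and it does not react to Retry-After values sent by the provider.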

The root cause of this exponential increase is usually one of the following:

1. Non-compliance with the Retry-After response header in a single API call implementation

Subsequent API calls are made without respecting the Retry-After window. This can happen even when the service as a whole complies with the 429 Retry-After protocol, if a developer adds new code that calls the API without handling the Retry-After case.
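One way to reduce this risk is to route every call through a single helper that always honors Retry-After, so new code cannot skip the check. The sketch below assumes a hypothetical `send` callable that returns `(status, retry_after_seconds)`; the helper name, the attempt limit, and the default wait are illustrative choices.

```python
import time
from typing import Callable, Optional, Tuple

Response = Tuple[int, Optional[float]]  # (HTTP status, Retry-After in seconds or None)


def call_with_retry_after(send: Callable[[], Response],
                          sleep: Callable[[float], None] = time.sleep,
                          max_attempts: int = 5,
                          default_wait: float = 1.0) -> Response:
    """Call `send`, waiting out every 429 for the Retry-After duration before retrying."""
    status, retry_after = send()
    for _ in range(max_attempts - 1):
        if status != 429:
            break
        # Never retry earlier than the provider asked; fall back if the header was missing.
        sleep(retry_after if retry_after is not None else default_wait)
        status, retry_after = send()
    return status, retry_after  # may still be a 429 if all attempts were used


# Usage with a stubbed provider and a recording "sleep" instead of real waiting:
replies = iter([(429, 10.0), (429, 3.0), (200, None)])
waits = []
result = call_with_retry_after(lambda: next(replies), sleep=waits.append)
print(result, waits)  # → (200, None) [10.0, 3.0]
```

Injecting `sleep` keeps the helper testable; in production the default `time.sleep` blocks the calling thread for the requested duration.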

2. Concurrent Consumption of API by Multiple Services

The scenario involves multiple services consuming the same API provider in parallel. Each service may honor its own "Retry-After" wait time, but because the services share the same API (using the same API token) without coordinating a unified retry state, one service's calls can land inside another service's cool-down. The result is a rapid, exponential increase in back-off across all API consumers.

The result - Consider Service A's subsequent API request. After receiving a 429 response from the API provider with a "Retry-After" value of 10 seconds, Service A made its next API call 10 seconds later, as directed, and that call should have been answered immediately.

However, because Service B and Service C were consuming the same resource concurrently, the "Retry-After" value increased by 400%, significantly prolonging the cool-down period for Service A's subsequent request. The same pattern of exponentially growing cool-downs applies to the consecutive API requests made by Service B and Service C.
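To make the mechanism concrete, here is a toy simulation of uncoordinated consumers. The escalation rule is an assumption made purely for illustration: every call that arrives during an active cool-down doubles the penalty and restarts the cool-down. Real providers, Outlook included, do not publish such a rule, so the numbers below are not the ones measured in this post. `EscalatingProvider` is a hypothetical class.

```python
from typing import Optional, Tuple


class EscalatingProvider:
    """Toy provider. ASSUMPTION: each call during an active cool-down doubles the penalty."""

    def __init__(self, base_penalty: float = 10.0):
        self.penalty = base_penalty  # current Retry-After value in seconds
        self.cooldown_until: Optional[float] = None  # None until the limit is first hit

    def call(self, now: float) -> Tuple[int, float]:
        if self.cooldown_until is None:
            # Assume the quota is already exhausted when the first call arrives.
            self.cooldown_until = now + self.penalty
            return 429, self.penalty
        if now < self.cooldown_until:
            # Call during the cool-down: escalate and restart the cool-down.
            self.penalty *= 2
            self.cooldown_until = now + self.penalty
            return 429, self.penalty
        return 200, 0.0


provider = EscalatingProvider()
# Each service only tracks its own Retry-After, so B and C call during A's cool-down,
# and A retries exactly 10 seconds later as it was told to.
timeline = [("A", 0.0), ("B", 2.0), ("C", 4.0), ("A", 10.0)]
results = [(svc, t, *provider.call(t)) for svc, t in timeline]
for svc, t, status, retry_after in results:
    print(f"t={t:>4}s service {svc}: {status}, Retry-After={retry_after}")
```

Service A obeys its own Retry-After perfectly, yet its retry at t=10s still lands inside a cool-down that B and C extended, so it is told to wait even longer.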

Explanation of the Sequence of Events

A closer look at the API traffic shows the following:

- A large number of API calls are initiated, and the client starts receiving 429 error responses. The API provider sends multiple consecutive responses with the Retry-After header, each requiring a 10-second delay before the next retry.

- The consecutive "Retry-After: 10 seconds" responses suggest that multiple services are consuming the same API provider simultaneously.

- The services consuming the API receive an excessive number of 429 responses, so most API responses are "429" responses.

- As the graph shows, most new API calls are therefore not processed, as indicated by the low volume of "200" responses.

- The effect is an increase in cool-off times, leading to longer periods before the next API call can be successfully processed and impacting the service's SLA.

- Despite the throttling mechanism in place, exponential back-off is triggered because the API calls are not synchronized across services. The Retry-After value grows to 210 seconds.

The result - Exponential back-off kicked in, so the workload took 15 minutes to clear instead of the 7 minutes it would have taken without the exponential rate limiting.

The Solution - “Consumer-Side 429 Responder”

Solution Description

We call the solution to these two scenarios a “Consumer-side 429 Responder Proxy”, or “Consumer-side 429” for short.

This proxy holds a shared state of the API call traffic for all services consuming the same API provider. Its responsibility is to tunnel traffic between the API consumers (services) and the API provider according to a defined policy, which states that:

“API calls to the API provider are prohibited until the cool-down period has ended. Any API call made during the cool-down period, regardless of the service, will be met with a simulated 429 response generated by the "Consumer-side 429 Responder Proxy," in accordance with the global Retry-After state.”
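The "simulated 429" part of this policy can be sketched as a small function that turns the shared cool-down deadline into a locally generated response. The function name `simulated_429`, the response shape (a plain dict), and the body text are hypothetical. Rounding up is a deliberate choice: a delay-seconds Retry-After value is a whole number, and rounding down would invite a retry slightly before the cool-down ends.

```python
import math
from typing import Dict, Optional


def simulated_429(now: float, cooldown_until: float) -> Optional[Dict[str, object]]:
    """Return a locally generated 429 if the shared cool-down is active, else None."""
    remaining = cooldown_until - now
    if remaining <= 0:
        return None  # cool-down over: the proxy may forward the call to the provider
    return {
        "status": 429,
        # Round up so the client never retries before the shared cool-down ends.
        "headers": {"Retry-After": str(math.ceil(remaining))},
        "body": "Too Many Requests (generated by the consumer-side proxy)",
    }


print(simulated_429(now=4.0, cooldown_until=10.0)["headers"])  # → {'Retry-After': '6'}
```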

Let’s see the sequence of events in action, now that the “Consumer-side 429” is in place.

- Service A initiates the first API request to the third-party API provider.

- The API request passes through the "Consumer-side 429", which maintains a shared Retry-After state for all services consuming the same third-party API provider. The API request is then routed to the third-party API provider.

- As the rate limit has been reached, the API provider responds with a "429" API response, indicating a required 10-second delay before the next API call.

- Two seconds into the sequence, Service B independently initiates an API request to the API provider.

- As the 10-second cool-down period is ongoing, the “Consumer-side 429” generates an API response without forwarding Service B's original request to the API provider.

- This prevents the cool-down period from growing because of an API call made during the cool-down.

- The API response sent to Service B does not come from the API provider; it is generated by the “Consumer-side 429 Proxy” itself.

- Maintaining a shared state among all services consuming the same API provider keeps their calls out of the cool-down period, so cool-down times stay minimal without the risk of exponential increases.

- Service C initiates an API call 4 seconds into the sequence.

- The “Consumer-side 429” responds with a generated 429 response indicating a Retry-After of 6 seconds, which ends exactly when Service A's original cool-down period ends.

- Service A waits for its 10-second cool-down period to pass and then initiates a consecutive API call to the third-party API provider.

- The “Consumer-side 429” forwards Service A's API call to the third-party API provider.
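The sequence above can be replayed with a minimal in-process sketch of the responder. `ConsumerSide429` is a hypothetical name, the provider is a stub that returns one 429 (Retry-After 10) and then succeeds, and time is passed in explicitly so the timeline is reproducible. This illustrates the policy; it is not the authors' implementation.

```python
import math
import threading
from typing import Callable, Tuple

Upstream = Callable[[], Tuple[int, float]]  # returns (status, retry_after_seconds)


class ConsumerSide429:
    """Hypothetical shared-state 429 responder in front of a single API provider."""

    def __init__(self, upstream: Upstream):
        self.upstream = upstream
        self.cooldown_until = 0.0  # global cool-down deadline shared by all callers
        self.lock = threading.Lock()  # protects the shared deadline across threads

    def request(self, now: float) -> Tuple[int, float, bool]:
        """Return (status, retry_after, forwarded_to_provider)."""
        with self.lock:
            if now < self.cooldown_until:
                # Cool-down active: answer locally and never touch the provider.
                return 429, float(math.ceil(self.cooldown_until - now)), False
        status, retry_after = self.upstream()
        if status == 429:
            with self.lock:
                # Record the provider's cool-down so every service sees it.
                self.cooldown_until = max(self.cooldown_until, now + retry_after)
        return status, retry_after, True


replies = iter([(429, 10.0), (200, 0.0)])  # stubbed provider: one 429, then success
proxy = ConsumerSide429(upstream=lambda: next(replies))
timeline = [("A", 0.0), ("B", 2.0), ("C", 4.0), ("A", 10.0)]
log = [(svc, t, proxy.request(t)) for svc, t in timeline]
for svc, t, (status, retry_after, forwarded) in log:
    print(f"t={t:>4}s service {svc}: {status} Retry-After={retry_after} forwarded={forwarded}")
```

Only two calls reach the provider: Service A's first call and its retry at t=10s. Two limits of this sketch are worth noting: the state lives in one process, so services running in separate deployments would need an external shared store for the cool-down deadline, and two requests arriving at the exact moment a cool-down ends can both be forwarded because the lock is released before the upstream call.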

The result - Services A, B, and C can make API calls to the third-party API provider without triggering exponential cool-downs, which improves performance and SLA compliance.

Explanation of the Sequence of Events in Graphs

- A peak in API consumption occurs until the API provider’s rate limit is unexpectedly reached.

- As a result, the API provider sends back the first 429 response, telling the API consumer to retry 10 seconds later.

- Another batch of increasing API call traffic emerges, but this time the request volume never exceeds the rate limit, so the provider returns no 429 "Too Many Requests" responses.

- Because consumption is kept below the rate limit threshold, the retry-after values do not increase above the initial 10 seconds duration.

- Even when there’s an unexpected traffic peak, as shown in section 4 of the graph, the Retry-After values still remain at or below 10 seconds.

- The increase in 429 responses (colored red in the graph) comes from the 429 responses generated by the “Consumer-side 429 Responder Proxy”. That is why, despite the increase in 429 responses, the Retry-After values do not increase exponentially along with them.

Conclusion

Excessive API consumption that peaks unexpectedly is a common issue. As we have seen, a sudden increase in API requests that is not bounded by the "Retry-After" header sent back by the API provider can cause an exponential increase in the cool-down time before the next API call can be processed. Failing to monitor API consumption and lacking resilience mechanisms can significantly affect the Service Level Agreement (SLA). The exponential increase in "Retry-After" shown in this blog post can severely lengthen the time a service needs to complete its batch API calls.

A best practice, whether multiple services or a single service consume the same API provider, is to route traffic through a unified shared-state proxy, referred to here as a “Consumer-side 429 Responder Proxy”, that manages the workload according to a defined policy. This keeps cool-down times within the user's defined limits.

If you are interested in learning and implementing more best practices to control and optimize your API consumption, reach out to us. Our experts will be happy to discuss your use-cases and help find a solution.

Ready to Start your journey?

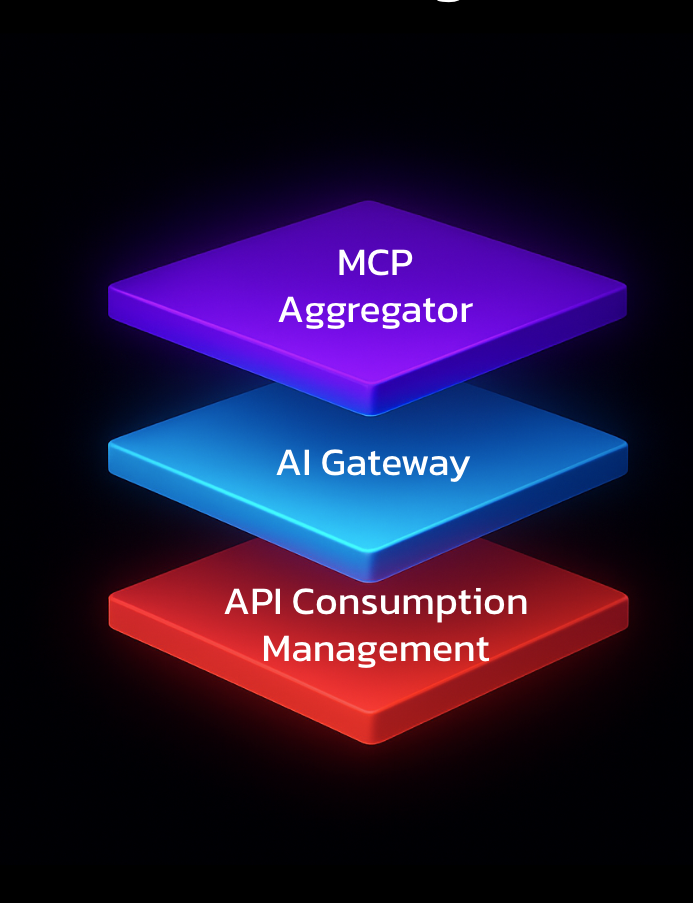

Manage a single service and unlock API management at scale