AI Gateways: Prepare for GenAI without Getting Lost in the Moonscape

Innovation and Insights on AI Gateways

Technology is changing faster than a toddler discovering a room full of buttons (and yes, some buttons are definitely not for pushing!). At the heart of this whirlwind lies the rise of AI and agentic capabilities, fueling SaaS products and startup innovation like a rocket on hyperdrive.

Speaking of AI, Gartner's recent report, "Innovation Insight: AI Gateways," caught my eye for two reasons. First, it reinforces a point worth driving home to your engineering organization and software architects: the rise of AI demands an infrastructure mindset, not just a developer's one. Second (shameless plug ahead), Lunar.dev, a much smaller player in the API landscape than Kong, Cloudflare, and even IBM (all clearly seeing the writing on the AI wall), got a shoutout as a key player in this evolving space!

For a while now, we at Lunar.dev have been advocating for a dedicated mediation layer (call it an orchestration layer) managed by an "API Consumption Gateway" (or a "reverse API gateway," if you prefer) to manage outbound API consumption. If this piques your interest, check out my latest Forbes piece on the topic.

So, let's leverage the lessons learned from the trenches (and Gartner's valuable insights) to explore the features of an AI Gateway, the potential risks of adoption, and Lunar.dev's unique perspective on this.

Going Forward: Traditional vs. Dedicated Gateways

Traditional API gateways have long served as intermediaries, managing inbound traffic to ensure security, scalability, and performance. However, with the surge in AI adoption and the proliferation of third-party APIs, there's a pressing need for a specialized infrastructure component to handle outbound API consumption. Gartner's report highlights this distinction, emphasizing that while traditional API gateways focus on incoming traffic, dedicated gateways, often referred to as reverse API gateways, are essential for managing outbound interactions.

Why Do You Need an AI Gateway?

Gartner's report perfectly captures the pain points organizations face:

- Uncontrolled Costs: Usage-based pricing models for AI services can quickly spiral out of control.

- Limited Visibility: Without a central point of control, it's difficult to track AI usage across your organization.

- Security Risks: API keys and data privacy require vigilant protection.

- Developer Friction: Integrating multiple AI APIs can be complex and time-consuming.

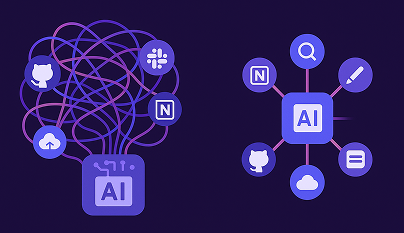

Enter the AI Gateway: Your Central Hub for AI Interactions

An AI Gateway serves as a central hub for your AI interactions, bridging your applications and the various AI providers they call. It strengthens security by protecting API keys, enforcing data masking and encryption, and preventing data exfiltration.
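To make data masking a bit more concrete, here's a minimal sketch of redacting sensitive values from a prompt before a gateway forwards it to an AI provider. It assumes simple regex rules; the patterns (email addresses, an OpenAI-style `sk-` key prefix, US Social Security numbers) and the `mask_prompt` helper are hypothetical illustrations, not Lunar.dev's or Gartner's specification.

```python
import re

# Hypothetical rules for illustration only; a real deployment would need a much
# broader, tested set (names, addresses, card numbers, provider-specific key formats).
MASKING_RULES = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"), "[API_KEY]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def mask_prompt(prompt: str) -> str:
    """Replace sensitive substrings with placeholders before the prompt leaves the network."""
    for pattern, placeholder in MASKING_RULES:
        prompt = pattern.sub(placeholder, prompt)
    return prompt


if __name__ == "__main__":
    raw = "Email jane.doe@example.com, key sk-abcdefghijklmnop1234, SSN 123-45-6789"
    print(mask_prompt(raw))  # Email [EMAIL], key [API_KEY], SSN [SSN]
```

Doing this at the gateway means every application gets the same masking policy without each team reimplementing it.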

It also provides enhanced visibility through detailed analytics and reporting, offering valuable insights into your organization's AI usage. By exposing standardized APIs and model-agnostic routing, it lets developers access multiple AI services through a single interface. Finally, it keeps costs in check through token optimization, caching, and throttling.
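Model-agnostic routing is easier to picture in code. The sketch below assumes applications send a provider-neutral request with a logical model name, and the gateway maps that name to an ordered list of providers, falling back when one fails. `ChatRequest`, `Router`, and the `provider-a`/`provider-b` adapters are hypothetical stubs; real adapters would call each vendor's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ChatRequest:
    """Provider-neutral request shape that applications send to the gateway."""
    model: str   # logical model name chosen by the platform team, e.g. "default-chat"
    prompt: str


# An adapter translates the neutral request into one provider's API call.
# These are stubs; real adapters would call each vendor's SDK or HTTP API.
Adapter = Callable[[ChatRequest], str]


@dataclass
class Router:
    routes: Dict[str, List[str]]  # logical model -> providers in priority order
    adapters: Dict[str, Adapter] = field(default_factory=dict)

    def complete(self, request: ChatRequest) -> str:
        errors: List[str] = []
        for provider in self.routes[request.model]:
            try:
                return self.adapters[provider](request)
            except Exception as exc:  # sketch only: fall through to the next provider
                errors.append(f"{provider}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def provider_a(request: ChatRequest) -> str:
    raise TimeoutError("upstream timeout")  # simulate an outage


def provider_b(request: ChatRequest) -> str:
    return f"provider-b answered: {request.prompt}"


router = Router(
    routes={"default-chat": ["provider-a", "provider-b"]},
    adapters={"provider-a": provider_a, "provider-b": provider_b},
)
answer = router.complete(ChatRequest(model="default-chat", prompt="hi"))
print(answer)  # provider-b answered: hi
```

Because applications only know the logical name `default-chat`, swapping or reordering providers becomes a gateway configuration change rather than a code change.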

Key Features Highlighted by Gartner

Gartner's report outlines several critical features that dedicated API gateways should encompass:

- Support for AI Tokens: Tracking and reporting token usage, implementing rate-limiting controls based on tokens, and optimizing prompt queries to reduce token consumption.

- Response Caching: Reducing the number of API calls and improving response times by caching previous responses.

- Portal for AI Services: Providing a dedicated developer portal for AI services, enabling self-service discovery and registration for access to AI models.

- AI Service Mediation: Transforming and routing requests based on defined policies, such as incorporating retrieval-augmented generation (RAG) and fine-tuning to improve responses.

- Request and Response Guardrails: Implementing model guardrails to validate and rectify AI service inputs and outputs, filtering out poor quality or offensive responses.

- Security Controls: Providing data masking, encryption, and data exfiltration detection to prevent sensitive data from being sent to AI services.
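Token-based rate limiting differs from classic request-based limiting because one call can cost ten tokens or ten thousand. Here's a minimal token-bucket sketch, assuming the gateway can estimate a call's tokens up front and learns the actual count from the provider's response; `TokenBudgetLimiter`, its parameters, and the injectable clock are hypothetical names chosen for illustration.

```python
import time
from typing import Callable


class TokenBudgetLimiter:
    """Token bucket whose unit is AI (LLM) tokens rather than requests."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity              # largest burst, in tokens
        self.refill_per_sec = refill_per_sec  # e.g. a per-minute budget divided by 60
        self.clock = clock
        self.available = capacity
        self.last = clock()

    def _refill(self) -> None:
        current = self.clock()
        self.available = min(self.capacity,
                             self.available + (current - self.last) * self.refill_per_sec)
        self.last = current

    def try_consume(self, estimated_tokens: float) -> bool:
        """Admit a call only if its estimated prompt + completion tokens fit the budget."""
        self._refill()
        if estimated_tokens <= self.available:
            self.available -= estimated_tokens
            return True
        return False

    def reconcile(self, estimated_tokens: float, actual_tokens: float) -> None:
        """Correct the budget once the provider reports actual usage (may go negative)."""
        self.available = min(self.capacity,
                             self.available - (actual_tokens - estimated_tokens))


fake_time = [0.0]  # fake clock so the example is deterministic
limiter = TokenBudgetLimiter(capacity=1000, refill_per_sec=50, clock=lambda: fake_time[0])
first = limiter.try_consume(800)   # True: 800 of 1000 tokens available
second = limiter.try_consume(300)  # False: only 200 tokens left
fake_time[0] += 2.0                # 2 s later: 200 + 2 * 50 = 300 tokens
third = limiter.try_consume(300)   # True
print(first, second, third)        # True False True
```

Admitting on an estimate and reconciling afterwards keeps the check cheap on the request path while still charging the budget for what was actually used.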

At Lunar.dev, we've recognized this paradigm shift and have developed a robust platform tailored to manage outbound API consumption effectively. Our solution offers a comprehensive suite of features designed to address the complexities of modern API interactions:

- Unified API Consumption Management: Our platform provides full visibility and control over all third-party API calls across environments and departments. By integrating seamlessly with existing monitoring stacks like Datadog and Prometheus, we offer a centralized view of API interactions.

- Advanced Traffic Control and Optimization: We enable the enforcement of rate limiting, quota management, concurrency control, and caching through pre-configured flows. This ensures efficient API call management across distributed environments and services. For instance, our Client-Side Limiting Flow allows you to define quotas and manage API consumption effectively.

- Scalability and Resilience: Built atop your existing infrastructure, Lunar.dev is designed to handle high volumes, reduce in-production errors, and minimize maintenance time. Our platform supports multiple gateway installations that share state through Redis, and includes fail-safe mechanisms such as pass-through or API re-routing on timeouts to prevent data loss or service disruption.

- Security and Compliance: We enforce security policies such as TLS, mTLS, and authentication tokens consistently across all external communications. By routing traffic through our gateway, we ensure that API requests meet the company's security and compliance standards, safeguarding sensitive data.
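The pass-through fail-safe mentioned under Scalability and Resilience can be illustrated with a generic pattern: if the gateway itself is unreachable or times out, send the call directly to the provider rather than failing it. This is a sketch under our own assumptions, not a description of how Lunar.dev implements its fail-safes; `with_failsafe`, `gateway_down`, and the two callables are hypothetical.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def with_failsafe(via_gateway: Callable[[], T], direct: Callable[[], T]) -> T:
    """Try the gateway first; on a gateway outage, pass the call straight through."""
    try:
        return via_gateway()
    except (TimeoutError, ConnectionError):
        # Traffic keeps flowing, but gateway policies (quotas, masking, logging)
        # are not applied on this direct path.
        return direct()


def gateway_down() -> str:
    raise ConnectionError("gateway unreachable")  # simulate an outage


result = with_failsafe(gateway_down, lambda: "direct response")
print(result)  # direct response
```

The sketch treats any `TimeoutError` or `ConnectionError` as a gateway failure. A real client would need to tell a gateway outage apart from a slow provider response, or it risks sending the same request twice.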

Addressing the Risks in Adopting AI Gateways

While AI gateways offer significant advantages in managing outbound API traffic, it's essential to acknowledge and address the inherent risks associated with their adoption. At Lunar.dev, we proactively tackle these challenges to provide a resilient and adaptable solution.

1. Navigating the Novelty and Scalability of AI Gateways

AI gateways are relatively new components within AI architectures, and their large-scale deployment remains unproven. Organizations might consider alternative approaches or rely on existing API gateways for simpler tasks like rate limiting.

Lunar.dev's Approach:

Recognizing the critical role we play in your AI interactions, we've engineered Lunar.dev to operate at scale and stay resilient. Our platform incorporates fail-safes, gateway redundancy, and load balancing to maintain system integrity under varying loads. Because Lunar.dev is self-hosted, it reduces latency and gives you more control over your infrastructure. Built on the robust HAProxy foundation, Lunar.dev delivers high performance and reliability. More about our architecture can be found here.
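Gateway redundancy and load balancing usually mean several gateway instances with traffic spread across them. Here's a minimal round-robin sketch that skips instances marked unhealthy; the `GatewayPool` class and the `gw-N` URLs are hypothetical, and in practice this job is typically handled by a dedicated load balancer rather than application code.

```python
import itertools
from typing import Dict, List


class GatewayPool:
    """Round-robin over redundant gateway instances, skipping unhealthy ones."""

    def __init__(self, instances: List[str]) -> None:
        self.instances = instances
        self.healthy: Dict[str, bool] = {url: True for url in instances}
        self._cycle = itertools.cycle(instances)

    def mark(self, url: str, healthy: bool) -> None:
        # In a real deployment this would be driven by periodic health checks.
        self.healthy[url] = healthy

    def next_instance(self) -> str:
        # Try at most one full rotation before giving up.
        for _ in range(len(self.instances)):
            url = next(self._cycle)
            if self.healthy[url]:
                return url
        raise RuntimeError("no healthy gateway instances")


pool = GatewayPool(["http://gw-1:8000", "http://gw-2:8000", "http://gw-3:8000"])
pool.mark("http://gw-2:8000", healthy=False)
picks = [pool.next_instance() for _ in range(4)]
print(picks)  # gw-1, gw-3, gw-1, gw-3 (gw-2 is skipped)
```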

2. Adapting to the Evolving AI Landscape

As AI usage evolves, new requirements emerge, and vendors prioritize different features. It's crucial to evaluate AI gateways thoroughly, ensuring they align with your functional and nonfunctional requirements, performance metrics, and IT architecture.

Lunar.dev's Approach:

Understanding the fast-paced nature of AI, we've designed Lunar.dev to be modular and extensible, a concept we call ‘Lunar Flows’. Currently, our platform supports predefined flows, and in upcoming releases we're committed to letting customers extend functionality according to their own business logic. This flexibility ensures that Lunar.dev can adapt to your evolving needs and grow with your organization.
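The idea of modular, composable flows can be illustrated with a generic middleware-chain pattern, where each step either adjusts the request or answers early. This is a hypothetical sketch of the concept only, not Lunar Flows' actual configuration format or API; `run_flow`, `add_header`, `reject_long_prompts`, `fake_send`, and the `x-team` header are made up for illustration.

```python
from typing import Any, Callable, Dict, List, Optional

Request = Dict[str, Any]
# A step may modify the request in place, or return a string to answer early.
Step = Callable[[Request], Optional[str]]


def run_flow(steps: List[Step], request: Request, send: Callable[[Request], str]) -> str:
    """Run each step in order; forward to the provider only if no step short-circuits."""
    for step in steps:
        early_response = step(request)
        if early_response is not None:
            return early_response
    return send(request)


def add_header(name: str, value: str) -> Step:
    def step(request: Request) -> Optional[str]:
        request.setdefault("headers", {})[name] = value
        return None
    return step


def reject_long_prompts(max_chars: int) -> Step:
    def step(request: Request) -> Optional[str]:
        if len(request.get("prompt", "")) > max_chars:
            return "rejected: prompt too long"
        return None
    return step


def fake_send(request: Request) -> str:
    # Stand-in for the real outbound call to an AI provider.
    return f"sent with headers {request.get('headers', {})}"


flow = [add_header("x-team", "search"), reject_long_prompts(50)]
ok = run_flow(flow, {"prompt": "short question"}, fake_send)
blocked = run_flow(flow, {"prompt": "x" * 100}, fake_send)
print(ok)       # sent with headers {'x-team': 'search'}
print(blocked)  # rejected: prompt too long
```

Keeping each step small and self-contained is what makes it possible to add customer-specific logic later without touching the rest of the pipeline.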

3. Mitigating Latency Concerns

Latency is a critical issue for intermediaries, especially in AI applications where users are sensitive to delays. While AI gateways can optimize metrics like time-to-first-token and tokens per second, they may also introduce additional latency. Caching AI responses can help, but it is tricky because prompts vary widely from request to request and across AI models.
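Part of what makes caching tricky is choosing the cache key. Here's a minimal exact-match sketch, assuming the key should include everything that can change the response (model, sampling parameters, and the prompt with whitespace normalized); `cache_key`, `ResponseCache`, and the model names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, Optional


def cache_key(model: str, prompt: str, params: Dict[str, Any]) -> str:
    """Hash everything that can change the response into one key.

    Including the model means the same prompt sent to two models never shares an entry.
    """
    normalized_prompt = " ".join(prompt.split())  # collapse whitespace differences
    payload = json.dumps(
        {"model": model, "prompt": normalized_prompt, "params": params},
        sort_keys=True,  # stable ordering so equal params give equal keys
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ResponseCache:
    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, model: str, prompt: str, params: Dict[str, Any]) -> Optional[str]:
        return self._store.get(cache_key(model, prompt, params))

    def put(self, model: str, prompt: str, params: Dict[str, Any], response: str) -> None:
        self._store[cache_key(model, prompt, params)] = response


cache = ResponseCache()
cache.put("model-a", "What is  an API gateway?", {"temperature": 0}, "cached answer")
hit = cache.get("model-a", "What is an API gateway?", {"temperature": 0})
miss = cache.get("model-b", "What is an API gateway?", {"temperature": 0})
print(hit, miss)  # cached answer None
```

Exact-match keys only pay off for repeated prompts, and cached answers are easiest to justify for deterministic settings such as temperature 0; matching merely similar prompts needs a different approach, such as embedding-based similarity.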

Lunar.dev's Approach:

We've built Lunar.dev to minimize its impact on traffic and latency. Self-hosting shaves off latency, and the HAProxy foundation supports high-speed processing. Our benchmarking results show that Lunar.dev adds only about 4 milliseconds of latency at the 95th percentile (check out the benchmarks), keeping API interactions fast and efficient.
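Latency overhead at the 95th percentile is commonly computed by comparing p95 latency with and without the intermediary. Here's a minimal sketch using the nearest-rank percentile definition, with synthetic numbers that are not Lunar.dev's benchmark data; we're not claiming this is exactly how the linked benchmarks were computed, and `percentile` and `added_p95` are hypothetical helpers.

```python
import math
from typing import List


def percentile(samples_ms: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def added_p95(direct_ms: List[float], via_gateway_ms: List[float]) -> float:
    """Overhead reported as p95 with the gateway minus p95 without it."""
    return percentile(via_gateway_ms, 95) - percentile(direct_ms, 95)


direct = [float(ms) for ms in range(100, 120)]  # 20 synthetic samples, 100..119 ms
via_gateway = [ms + 3.0 for ms in direct]        # synthetic: constant 3 ms overhead
overhead = added_p95(direct, via_gateway)
print(overhead)  # 3.0
```

The difference of two p95 values is not the same as the p95 of per-request differences, so it's worth checking which one a benchmark reports.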

Gartner's "Innovation Insight: AI Gateways" report highlights the necessity of specialized infrastructure to manage outbound API consumption effectively. Lunar.dev is at the forefront of this movement, offering robust solutions to navigate these complexities.

Key Takeaways:

- Cost Management: AI Gateways help control expenses through token optimization, caching, and throttling.

- Enhanced Visibility: They provide detailed analytics, offering insights into your organization's AI usage.

- Robust Security: Protecting API keys and enforcing data masking and encryption ensures a secure environment.

As AI continues to integrate into various sectors, adopting AI Gateways becomes crucial for efficient and secure operations. Lunar.dev is committed to guiding organizations through this transition with innovative solutions.

Ready to optimize your AI interactions? Visit Lunar.dev to learn more.
